
What Is ChatGPT-4o and What Makes It Superior to Previous AI Models?

Generative AI continues to advance at a sprinting pace, and OpenAI's announcement and release of GPT-4o (the "o" stands for "omni") promises to propel ChatGPT and its related services forward in several key areas. If you don't have access to GPT-4o already, you will soon.

In this guide, we'll examine what sets OpenAI's new ChatGPT-4o model apart, its improved capabilities over previous versions, cost considerations, and how to access and use it.

GPT-4o vs. GPT-4 Turbo and GPT-3.5

We've previously covered the differences between GPT-3.5 and GPT-4 Turbo in detail, but in summary, GPT-4 is significantly more capable than GPT-3.5. It can comprehend much more nuance, produce more accurate results, and is considerably less prone to AI hallucinations. GPT-3.5 remains relevant as it's fast, available for free, and can still handle most everyday tasks adeptly, provided you're aware of its higher likelihood of generating incorrect information.

GPT-4 Turbo had been OpenAI's flagship model until the arrival of GPT-4o. This model was exclusively available for ChatGPT Plus subscribers and offered all the advanced features OpenAI developed, such as custom GPTs and live web access.

Crucially, according to OpenAI, GPT-4o is half the cost and twice the speed of GPT-4 Turbo. This efficiency gain is likely why GPT-4o is being offered to both free and paid users, although paying subscribers get five times the usage limit of free users.

It's broadly just as intelligent as GPT-4 Turbo, so this advancement primarily focuses on efficiency. However, there are some obvious and not-so-obvious enhancements that extend beyond mere speed and cost improvements.

What Can GPT-4o Do?

The key term for GPT-4o is "multimodal," meaning it can operate on audio, images, video, and text. While GPT-4 Turbo also had this capability, GPT-4o's implementation is different under the hood.

OpenAI states that it trained a single neural network on all modalities together. With GPT-4 Turbo, voice mode chained separate models: one converted your spoken words to text, GPT-4 interpreted and responded to that text, and another model converted the reply back into a synthesized AI voice.

With GPT-4o, a single unified model handles the whole exchange, which has numerous knock-on effects on performance and abilities. OpenAI claims that GPT-4o now responds in conversation within a few hundred milliseconds, roughly the pace of a real-time conversation between two people. Compared with the 3-5 second response times of the older voice pipeline, that is a significant leap.

Apart from being much more responsive, GPT-4o can now also interpret nonverbal elements of speech, such as the tone of your voice, and its own responses now have an emotional range. It can even sing! In other words, OpenAI has imbued GPT-4o with some level of affective computing capability.

The same efficiency and unification extend to text, images, and video. In one demo, GPT-4o holds a real-time conversation with a person over live video and audio. Much like a human on a video call, GPT-4o appears to interpret what it sees through the camera and draw insightful inferences. Additionally, ChatGPT-4o can keep far more tokens in context than older models such as GPT-3.5 (through the API, its context window is 128,000 tokens, the same as GPT-4 Turbo), allowing it to apply its intelligence to much longer conversations and larger datasets. This should make it more useful for tasks such as helping you write a novel.

As of this writing, not all of these features are available to the public yet, but OpenAI has stated they will roll them out in the weeks following the initial announcement and release of the core model.

How Much Does GPT-4o Cost?

GPT-4o is available to both free and paid users, although paying users get five times the usage limit. As of this writing, ChatGPT Plus still costs $20/month. If you're a developer, check OpenAI's current API pricing against your specific needs, but GPT-4o should cost significantly less than GPT-4 Turbo.
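
For developers, the sketch below shows one way to send a prompt to GPT-4o and estimate what the request cost. It assumes the official `openai` Python package (version 1.x) is installed and that an API key is set in the `OPENAI_API_KEY` environment variable. The helper names `ask_gpt4o` and `estimate_cost` are hypothetical, and no prices are hard-coded: you pass in the current per-million-token input and output prices from OpenAI's pricing page yourself.

```python
from __future__ import annotations


def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Estimate the dollar cost of one request from its token counts.

    Hypothetical helper: prices are per one million tokens and must be
    taken from OpenAI's current pricing page (none are hard-coded here).
    """
    return (prompt_tokens * input_price_per_million
            + completion_tokens * output_price_per_million) / 1_000_000


def ask_gpt4o(prompt: str) -> tuple[str, int, int]:
    """Send one prompt to GPT-4o; return (reply, prompt_tokens, completion_tokens).

    Hypothetical helper built on the openai 1.x Chat Completions API.
    """
    # Imported here so estimate_cost() works even without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads the API key from OPENAI_API_KEY
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": prompt}],
    )
    usage = response.usage  # token counts reported by the API for this call
    return (response.choices[0].message.content,
            usage.prompt_tokens,
            usage.completion_tokens)
```

Calling `ask_gpt4o("Explain 'multimodal' in one sentence.")` returns the reply along with the token counts, which you can pass to `estimate_cost` together with the prices you looked up. Keeping the prices as parameters means the sketch doesn't go stale when OpenAI changes its rates.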

How to Use GPT-4o

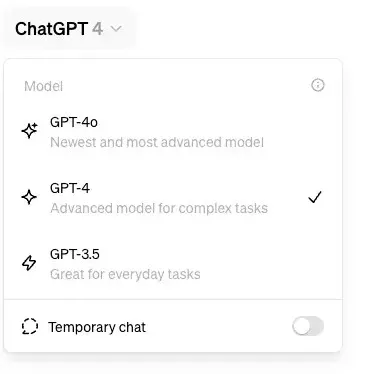

As mentioned above, GPT-4o is available to both free and paid users, but not all of its features are online immediately. So, depending on when you read this, the exact things you can do with it will vary. That said, using GPT-4o is quite straightforward. If you're using the ChatGPT website as a ChatGPT Plus subscriber, simply click the drop-down menu that displays the current model's name and select GPT-4o.

If you are a free user, ChatGPT will default to GPT-4o until you exhaust your allocation. At that point it switches back to GPT-3.5 until more requests become available.

As of this writing, GPT-4o has not yet reached the free tier, so the description above is based on OpenAI's documentation. The behavior may differ slightly once the model rolls out to free users.

If you're using the mobile app, tap the three dots and choose the model from there.

Then, you can use ChatGPT as you normally would!

When ChatGPT-4o is released in its final form, we may finally have the conversant, natural language assistant that science fiction has long promised. Whether that's a positive or negative development is a matter of perspective, but it certainly is an exciting prospect.